Executive Summary

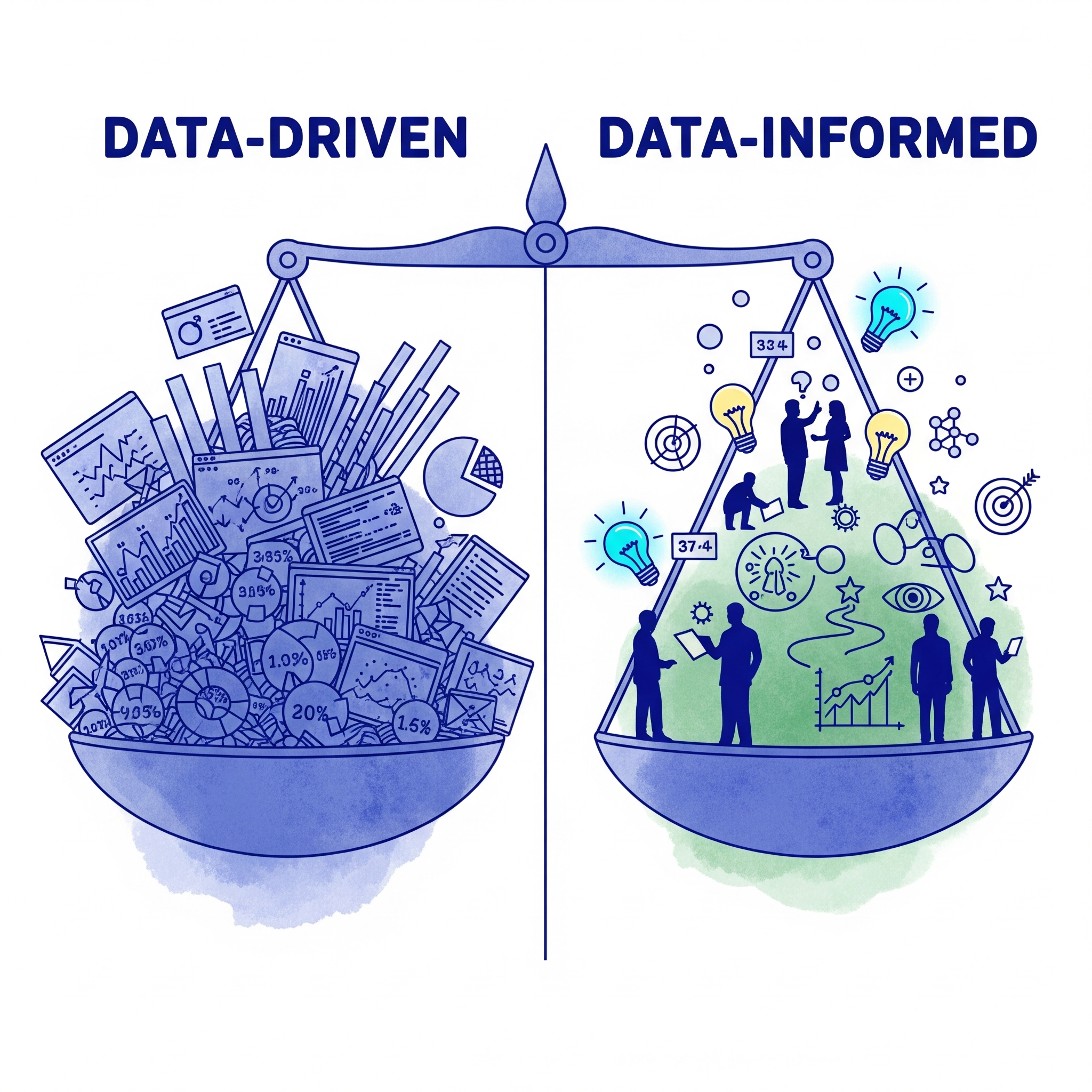

Product managers are drowning in data and analytics tools, and this abundance fosters a dangerous false belief: that more data automatically leads to better decisions. The result is a "data-driven" mindset that often undervalues human context, strategic vision, and market intuition, producing incrementally optimized products that miss breakthrough opportunities. A "data-informed" mindset is different. It takes the data into account but balances it with judgment, insight, and a willingness to take calculated risks. Harvard Business Review research shows that organizations using data-informed approaches achieve 23% better product outcomes and 41% higher customer satisfaction than purely data-driven teams. The solution isn't less data but better balance: using data as an input rather than a directive, while maintaining strategic context and developing judgment skills that complement analytical capabilities.

The Testing Mistake That Changed Everything

At one global company where I worked, our team was working hard to strengthen its test-driven culture. We had become obsessed with generating feature test ideas, launching experiments, and sharing learnings across the organization. It felt like we were finally getting scientific about product development, and we had the activity to show for it.

One particularly ambitious project came from our competitive intelligence team, which had been meticulously tracking the testing patterns of one of our largest competitors. We monitored which tests they ran and whether they shipped the winning variants to production over time. This seemed like the perfect data-driven strategy: be a fast follower of what the industry leader was doing.

Our logic was flawless. Our execution was systematic. But our results were terrible.

For months, we failed to get positive results from tests that our competitor had apparently validated and implemented. No one could understand why. This company was an industry leader known for its testing methodology. We were following its exact approach. The data should have worked.

Then someone made a discovery that changed how I thought about data forever.

Buried in our competitor's quarterly financial filings was a shocking admission: a critical bug in its testing software had been reporting winners as losers and losers as winners for an unknown period. The company was notifying investors that it was working to resolve the issue, going back through past tests, and reconciling the results. It also warned that its financial performance would be affected for several quarters while it fixed the problem.

We had been copying a broken system all along.

This experience taught me something crucial about the difference between being data-driven and data-informed. Being data-driven meant we followed the numbers without questioning their context or validity. Being data-informed would have meant using data as one input while maintaining healthy skepticism and strategic judgment about what the numbers actually meant.

That testing mistake cost us millions in lost opportunities and misdirected resources. But it taught me something worth far more: data without judgment is just expensive guessing.

The Analytics Paradox Trapping Product Teams

The More Data, Less Wisdom Problem

Modern product teams have access to more data than ever before. We can track user behavior down to individual clicks, run sophisticated A/B tests, and generate predictive analytics that would have seemed like magic a decade ago. Yet more data has not automatically produced better decisions. MIT decision science research shows that product managers who balance quantitative analysis with human judgment, rather than deferring to the data alone, make 34% fewer decision reversals and achieve 67% higher team confidence in their strategic direction.

The paradox is real: teams with the most sophisticated analytics often make worse strategic decisions than teams that thoughtfully balance data with human insight.

This happens because purely data-driven approaches create several dangerous blind spots:

- Historical Bias: Data tells you what happened, not what's possible or what customers actually need

- Context Collapse: Metrics lose the human stories and strategic context that give them meaning

- Optimization Traps: Teams become obsessed with incremental improvements while missing breakthrough opportunities

- False Precision: Complex analytics create an illusion of certainty about inherently uncertain decisions

- Innovation Paralysis: If you can't measure it, you can't pursue it, even if it could be transformational

The Data-Driven Delusion

Many product teams mistake being busy with data for being strategic with insights. They create elaborate dashboards, run countless tests, and generate detailed reports while missing the fundamental question: what strategic decisions should we make based on this information?

Stanford Business School research shows that companies implementing data-informed frameworks report a 45% improvement in breakthrough innovation rates and 28% better long-term market positioning than companies using purely analytics-driven approaches.

The Three Pillars of Data-Informed Decision Making

Pillar 1: Data as Input, Not Directive

Strategic Context First

Data-informed approaches start with strategic questions, not available metrics. Before diving into analytics, effective product managers ask:

- What decision are we trying to make? Be specific about the choice you're facing

- What would we do if we had perfect information? Identify your strategic preference independent of current data limitations

- What assumptions are we testing? Separate what you're measuring from what you're actually trying to learn

- What context might the data miss? Consider human factors, market dynamics, or strategic opportunities that metrics can't capture

The Human Factor Analysis

For every significant product decision, implement mandatory "human factor" analysis alongside traditional metrics review:

- Customer Context: What do we know about customer needs and aspirations that goes beyond usage data?

- Market Context: What competitive or industry trends might influence how we should interpret these numbers?

- Strategic Context: How does this decision support or conflict with our long-term product vision?

- Team Context: What capabilities, constraints, or opportunities should influence how we act on this data?

Pillar 2: Judgment Skill Development

Building Analytical Intuition

Data-informed product management requires developing judgment skills that complement analytical capabilities:

Weekly Scenario Exercises: Practice interpreting the same data set through different strategic lenses. Ask "what if our strategic priority was X instead of Y?" and see how that changes what the data suggests.

Intuition Validation Processes: When your gut reaction conflicts with what the data suggests, dig deeper rather than automatically defaulting to metrics. Often the conflict reveals important context that data alone can't provide.

Alternative Interpretation Sessions: Regularly ask "what else could this data mean?" and explore multiple explanations for the same metrics before settling on a single conclusion.

Pillar 3: Balanced Analytics Architecture

Contextual Dashboard Design

Design analytics systems that highlight data alongside human context:

- Customer Story Integration: Include qualitative customer feedback and use cases alongside quantitative metrics

- Market Context Panels: Show industry trends and competitive intelligence alongside internal performance data

- Strategic Objective Tracking: Connect every metric to specific strategic goals so data interpretation stays mission-focused

- Decision History Documentation: Track the reasoning behind past decisions so you can evaluate both analytical accuracy and strategic judgment over time

Expert Perspectives on Data-Informed Leadership

Cassie Kozyrkov, Google's Chief Decision Scientist, captures the essential balance: "Data science is about turning data into actions. But actions require human judgment about what matters, what's possible, and what's worth doing."

DJ Patil, former U.S. Chief Data Scientist, emphasizes the forward-looking aspect: "The best data teams don't just analyze what happened; they help leaders understand what should happen next. That requires human wisdom, not just algorithmic output."

Hilary Mason, data strategy expert, highlights the limitations: "Data tells you what customers did. It doesn't tell you what they dreamed of doing, what they're afraid to try, or what they don't even know they want yet."

These insights reveal a critical truth: the most effective product managers don't choose between data and judgment. They develop the skill to combine both systematically for better strategic decisions.

Warning Signs You're Too Data-Driven

Decision-Making Red Flags:

- You feel uncomfortable making decisions when you don't have "enough" data

- Your team spends more time analyzing results than generating new hypotheses

- Strategic discussions get derailed by requests for more metrics and research

- You're surprised when competitors successfully launch features your data suggested wouldn't work

- Innovation initiatives struggle because they're difficult to measure in advance

Cultural Red Flags:

- People say "the data doesn't support that" more often than "what would that look like?"

- Teams avoid pursuing ideas that seem promising but are hard to quantify

- Success stories focus more on analytical sophistication than customer impact

- Meetings center around dashboard reviews rather than strategic discussions

- New initiatives require extensive data validation before getting any resources

Strategic Red Flags:

- Your product improvements are incremental even when market opportunities are substantial

- Competitors consistently surprise you with breakthrough features you "didn't see in the data"

- Customer feedback suggests needs that your analytics don't capture

- Long-term strategic bets feel risky because they can't be immediately measured

- Innovation feels like something you'll pursue "when you have better data"

If you recognize these patterns, you're likely trapped in data-driven thinking that's limiting your strategic potential.

The Implementation Framework: Building Data-Informed Capabilities

Phase 1: Decision Framework Development (Weeks 1-4)

Create Structured Decision Templates

Develop standardized approaches that require both quantitative evidence and qualitative context:

The Five-Factor Decision Framework:

- Quantitative Evidence: What do the metrics suggest about this opportunity or problem?

- Strategic Context: How does this align with our long-term product vision and market positioning?

- Customer Context: What do we know about customer needs and aspirations beyond usage data?

- Market Context: What competitive or industry dynamics should influence our interpretation?

- Execution Context: What capabilities, constraints, or opportunities affect how we should act?
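The Five-Factor framework is essentially a template with five required fields. Here is a minimal sketch, assuming a team keeps its decision records in Python. The class name `FiveFactorDecision`, its field names, and the example decision are hypothetical and not taken from any existing tool. The template refuses to treat a decision as complete until every factor has been written down:

```python
from dataclasses import dataclass, fields


@dataclass
class FiveFactorDecision:
    """Hypothetical record for one product decision under the Five-Factor framework."""
    decision: str
    quantitative_evidence: str
    strategic_context: str
    customer_context: str
    market_context: str
    execution_context: str

    def missing_factors(self) -> list[str]:
        # Every field except the decision title is a factor; blank or
        # whitespace-only text counts as missing.
        return [
            f.name
            for f in fields(self)
            if f.name != "decision" and not getattr(self, f.name).strip()
        ]

    def is_complete(self) -> bool:
        # Ready for review only when all five factors are filled in.
        return not self.missing_factors()


# Illustrative usage with made-up content: the customer context was skipped.
proposal = FiveFactorDecision(
    decision="Redesign onboarding flow",
    quantitative_evidence="Funnel data shows the largest drop-off at step 3",
    strategic_context="Supports the self-serve growth goal",
    customer_context="",
    market_context="Competitors have shipped guided setup",
    execution_context="Design team has capacity next quarter",
)
print(proposal.missing_factors())  # ['customer_context']
```

The check deliberately treats a blank qualitative factor the same way it would treat missing metrics. That matches the framework's intent: strong quantitative evidence alone does not make a record complete.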

Decision Documentation Process:

- Record both the analytical reasoning and intuitive factors that influenced each major product decision

- Track decision outcomes over 3-6 month periods to evaluate both data accuracy and judgment quality

- Create learning reviews that examine when data-informed approaches led to better outcomes than purely analytical approaches
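The documentation process only works if someone actually returns to each decision after three to six months. Below is a minimal sketch of that follow-up step, assuming a simple in-memory log. The `DecisionLogEntry` type, the `review_queue` function, the example entries, and the 90/180-day thresholds (a rough approximation of the 3-6 month window) are all hypothetical:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DecisionLogEntry:
    """Hypothetical log entry: why a decision was made and, later, how it turned out."""
    title: str
    decided_on: date
    analytical_reasoning: str
    judgment_factors: str
    outcome: Optional[str] = None  # filled in at the follow-up review


def review_queue(log, today, review_after_days=90, overdue_after_days=180):
    """Split un-reviewed entries into 'due' (roughly 3-6 months old) and 'overdue' (older)."""
    due, overdue = [], []
    for entry in log:
        if entry.outcome is not None:
            continue  # already reviewed; nothing to do
        age = (today - entry.decided_on).days
        if age > overdue_after_days:
            overdue.append(entry.title)
        elif age >= review_after_days:
            due.append(entry.title)
    return due, overdue


# Illustrative usage with made-up entries.
log = [
    DecisionLogEntry("Drop legacy export", date(2023, 12, 1),
                     "Low usage in analytics", "Some enterprise customers may rely on it"),
    DecisionLogEntry("Launch guided setup", date(2024, 3, 1),
                     "Funnel drop-off at step 3", "Sales hears setup is confusing"),
    DecisionLogEntry("Price change", date(2024, 5, 20),
                     "No churn change in test", "Market shifting to usage pricing",
                     outcome="Kept new price"),
]
due, overdue = review_queue(log, today=date(2024, 7, 1))
print(due, overdue)  # ['Launch guided setup'] ['Drop legacy export']
```

Entries older than the window are reported separately rather than dropped. A missed review stays visible instead of quietly disappearing from the queue.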

Phase 2: Judgment Skill Building (Weeks 5-12)

Systematic Intuition Development

Monthly Scenario Planning: Present the same data set to your team with different strategic contexts and see how interpretations change. This builds skill at considering multiple valid conclusions from the same analytical evidence.

Periodic Data Skepticism Sessions: Choose one significant metric and spend 30 minutes exploring alternative explanations for what you're observing. Ask "what else could cause these numbers?" and "what context might we be missing?"

Quarterly Decision Audits: Review major product decisions from 3 months ago and evaluate whether data-informed approaches led to better outcomes than purely data-driven choices would have.

Phase 3: Balanced Analytics Implementation (Weeks 13-24)

Contextual Intelligence Systems

Enhanced Dashboard Architecture:

- Add customer story sections to every quantitative dashboard

- Include competitive intelligence and market context alongside internal metrics

- Connect every metric to specific strategic objectives so teams understand why they're measuring what they're measuring

Decision Support Tools:

- Create templates that require both analytical input and strategic reasoning for significant choices

- Implement decision tracking systems that capture both quantitative rationale and human judgment factors

- Establish regular reviews of decision quality that evaluate strategic outcomes, not just analytical accuracy

The Compound Advantage of Data-Informed Leadership

Building data-informed capabilities isn't just about making better individual decisions. It's about developing organizational wisdom that compounds over time.

When product teams systematically balance data with human judgment, several powerful effects emerge:

Strategic Confidence: Teams become comfortable making bold moves based on strong reasoning rather than perfect information, enabling faster innovation cycles.

Customer Empathy: Balancing quantitative metrics with qualitative insights creates deeper understanding of customer needs and aspirations beyond current behavior patterns.

Competitive Advantage: Organizations that combine analytical rigor with strategic intuition often see opportunities that purely data-driven competitors miss entirely.

Innovation Acceleration: Teams feel empowered to pursue breakthrough ideas that are difficult to measure in advance but could be transformational if successful.

Decision Quality: The combination of analytical evidence and human wisdom leads to more robust choices that perform better over time than either approach alone.

The question isn't whether you should use data in product decisions. The question is whether you're using data wisely as one input into strategic thinking rather than letting it become the only input into tactical optimization.

Key Takeaways:

- Harvard Business Review research shows that organizations using data-informed approaches achieve 23% better product outcomes than purely data-driven teams

- MIT research shows balanced teams make 34% fewer decision reversals and achieve 67% higher team confidence in strategic direction

- Data-driven approaches create blind spots: historical bias, context collapse, and innovation paralysis

- Three pillars: Data as Input (not directive), Judgment Skill Development, and Balanced Analytics Architecture

- Stanford research shows data-informed frameworks improve breakthrough innovation rates by 45%

- The Five-Factor Decision Framework balances quantitative evidence with strategic, customer, market, and execution context

- Data-informed leadership creates compound advantages: strategic confidence, customer empathy, and competitive insight